
Machine-learning-driven image-based controllers allow robotic systems to take intelligent actions based on visual feedback from their environment. Understanding when these controllers might lead to system safety violations is important for integrating them into safety-critical applications and for engineering corrective safety measures for the system. Existing methods leverage simulation-based testing (or falsification) to find the failures of vision-based controllers, i.e., the visual inputs that lead to closed-loop safety violations. However, these techniques do not scale well to scenarios involving high-dimensional and complex visual inputs, such as RGB images. In this work, we cast the problem of finding closed-loop vision failures as a Hamilton-Jacobi (HJ) reachability problem. Our approach blends simulation-based analysis with HJ reachability methods to compute an approximation of the backward reachable tube (BRT) of the system, i.e., the set of unsafe states for the system under vision-based controllers. Utilizing the BRT, we can tractably and systematically find the system states and corresponding visual inputs that lead to closed-loop failures. These visual inputs can be subsequently analyzed to find the input characteristics that might have caused the failure. Besides its scalability to high-dimensional visual inputs, an explicit computation of the BRT allows the proposed approach to capture non-trivial system failures that are difficult to expose via random simulations. We demonstrate our framework on two case studies involving an RGB image-based neural network controller for (a) autonomous indoor navigation, and (b) autonomous aircraft taxiing.

Despite their widespread success in robotics, vision-based controllers can fail when they are subjected to inputs that are scarcely encountered in the training dataset or lie outside the training distribution. Thus, to successfully adopt vision-based neural network controllers in safety-critical applications, it is vital to analyze them and understand when and why they result in a system failure.

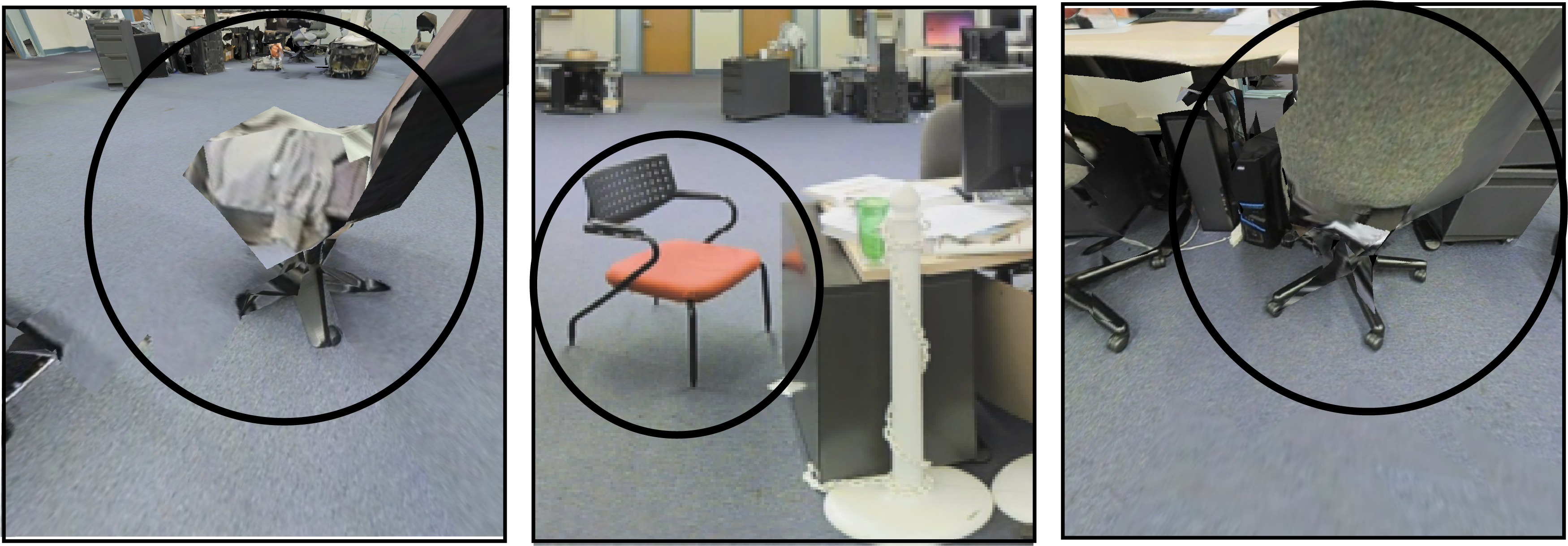

Images from the same dataset, showing three different styles of chair. Even such seemingly subtle differences can result in out-of-distribution errors. If the training data does not have a rich distribution of features, the learned visual policy may overfit and fail to generalize to novel data points. Our proposed method aims to capture, in a sample-efficient manner, the images that could lead to failures of the visual controller.

In this work, we cast the problem of finding closed-loop vision failures as a Hamilton-Jacobi (HJ) reachability problem and compute the Backward Reachable Tube (BRT) of the system. Given a set of unsafe states (e.g., obstacles for a navigation robot), the BRT is the set of all starting states from which the system eventually reaches an unsafe state under the vision-based controller. The sequences of visual inputs corresponding to the states in the BRT can therefore be classified as inputs that result in closed-loop system failures, enabling us to discover failures in a systematic manner.
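
To make the BRT definition above concrete, here is a minimal sketch on a toy one-dimensional system. It is not the method or code used in this work: the dynamics, the `controller` stub (which pretends perception fails for `x > 1.0`), the unsafe set, and all constants are assumptions chosen for illustration. The sketch uses a discrete-time value recursion on a grid, where a state belongs to the BRT once its value (the smallest safety margin seen along the closed-loop trajectory) drops to zero or below.

```python
from bisect import bisect_right

DT = 0.1        # time step in seconds (assumed)
UNSAFE_X = 1.8  # states with x >= UNSAFE_X count as unsafe (assumed)


def controller(x):
    """Hypothetical stand-in for a vision-based controller.

    It steers towards x = 0, except where x > 1.0, where we pretend
    perception fails and the controller pushes the system away instead.
    """
    return 0.5 if x > 1.0 else -x


def interp(x, grid, values):
    """Piecewise-linear interpolation, clamped to the end values of the grid."""
    if x <= grid[0]:
        return values[0]
    if x >= grid[-1]:
        return values[-1]
    i = bisect_right(grid, x) - 1
    w = (x - grid[i]) / (grid[i + 1] - grid[i])
    return (1.0 - w) * values[i] + w * values[i + 1]


def compute_brt(grid, steps=100):
    """Return a boolean mask of grid states that reach the unsafe set."""
    margin = [UNSAFE_X - x for x in grid]  # <= 0 inside the unsafe set
    successor = [x + DT * controller(x) for x in grid]  # one closed-loop step
    value = list(margin)
    for _ in range(steps):
        # V_{k+1}(x) = min(margin(x), V_k(successor of x))
        value = [min(m, interp(nx, grid, value))
                 for m, nx in zip(margin, successor)]
    return [v <= 0.0 for v in value]


grid = [-2.0 + 0.01 * i for i in range(401)]
brt = compute_brt(grid)
failing = [x for x, inside in zip(grid, brt) if inside]
print(f"BRT spans roughly [{failing[0]:.2f}, {failing[-1]:.2f}]")
```

In this toy setting the BRT includes states that are not yet unsafe themselves but are doomed under the faulty controller, which is the kind of state whose visual inputs one would then inspect.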

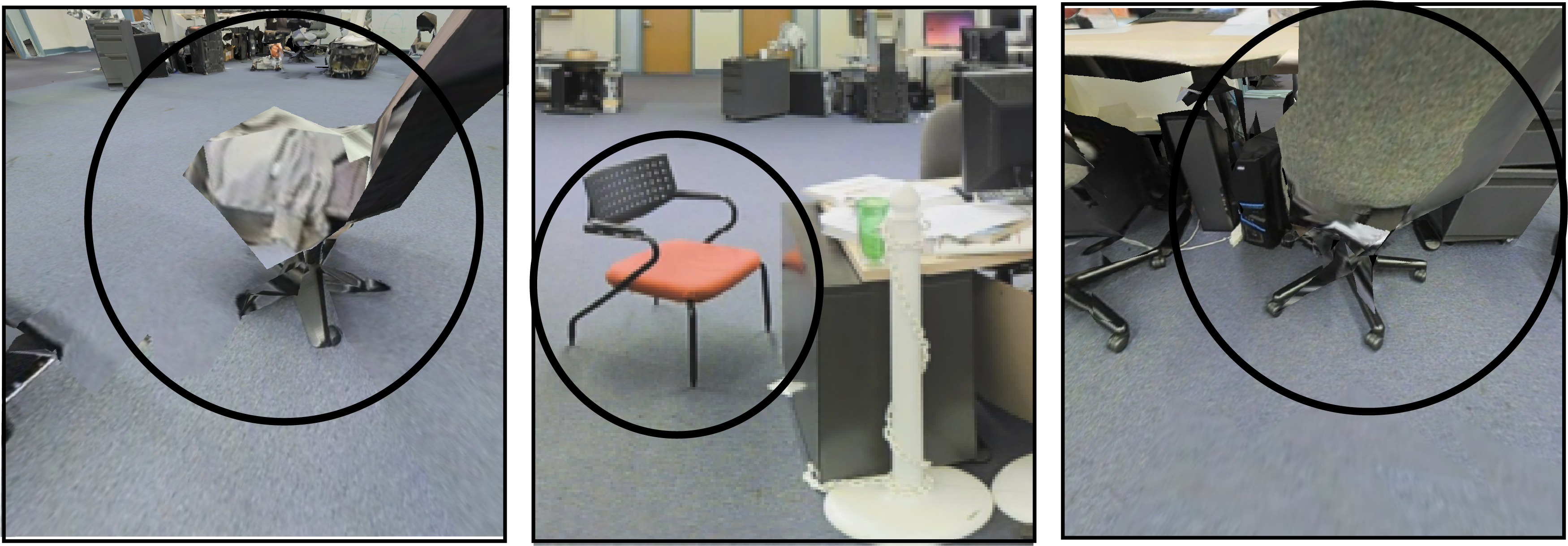

In the first case study, we present an aircraft taxiing problem. The goal of the aircraft is to follow the centreline of the runway as closely as possible using the images obtained through a camera mounted on its right wing. For this purpose, the aircraft uses a Convolutional Neural Network (CNN) along with a proportional controller to return the control input (change in heading angle). We compute the BRT that captures all possible unsafe states of the system. As a simple example (shown below), we start the aircraft from one state inside the BRT and one outside it. Even though these states share the same position, the BRT predicts that changing the initial heading of the aircraft is enough to alter the visual controller's ability to accomplish the taxiing task. This allows us to visually detect and analyze failure cases of the controller in a systematic manner.

(Center) The BRT of the system (grey area) shows all states starting from which the aircraft fails to accomplish the taxiing task. The aircraft starting from the red starred state, contained within the BRT, is simulated on the left, while the aircraft starting from the green starred state, not enclosed in the BRT, is simulated on the right. The BRT turns the determination of failures over high-dimensional inputs such as RGB images into a visually intuitive procedure.
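
The heading sensitivity described above can be mimicked with a small closed-loop rollout. This is only a sketch under loud assumptions: `perceive` is a hypothetical stub standing in for the CNN, its failure mode (reporting zero error once the heading error exceeds `VIEW_LIMIT`) is invented for illustration and is not a finding of the case study, and the kinematic model, gains, speed, and runway width are made-up values.

```python
import math

DT = 0.1                  # time step in seconds (assumed)
SPEED = 5.0               # constant taxiing speed in m/s (assumed)
HALF_WIDTH = 10.0         # runway half-width in metres (assumed)
K_P, K_THETA = 0.02, 0.8  # hypothetical proportional gains
VIEW_LIMIT = 0.5          # heading error (rad) beyond which the stub fails


def perceive(p, theta):
    """Hypothetical stand-in for the CNN.

    Returns estimates of (cross-track error, heading error). Assumed
    failure mode: beyond VIEW_LIMIT the centreline is out of view and
    the stub wrongly reports zero error.
    """
    if abs(theta) > VIEW_LIMIT:
        return 0.0, 0.0
    return p, theta


def stays_on_runway(p, theta, steps=200):
    """Simulate the closed loop; report whether |p| stays within HALF_WIDTH."""
    for _ in range(steps):
        p_hat, theta_hat = perceive(p, theta)
        # Proportional control acting on the perceived errors.
        u = -K_P * p_hat - K_THETA * theta_hat
        p += DT * SPEED * math.sin(theta)  # cross-track drift
        theta += DT * u                    # control changes the heading
        if abs(p) > HALF_WIDTH:
            return False
    return True


# Same starting position, two different initial headings.
for heading in (0.3, 0.6):
    print(heading, stays_on_runway(0.0, heading))
```

With these made-up numbers, the rollout with the smaller initial heading stays on the runway and the other leaves it, even though both start on the centreline. A BRT summarizes this outcome for every initial state at once instead of one rollout at a time.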

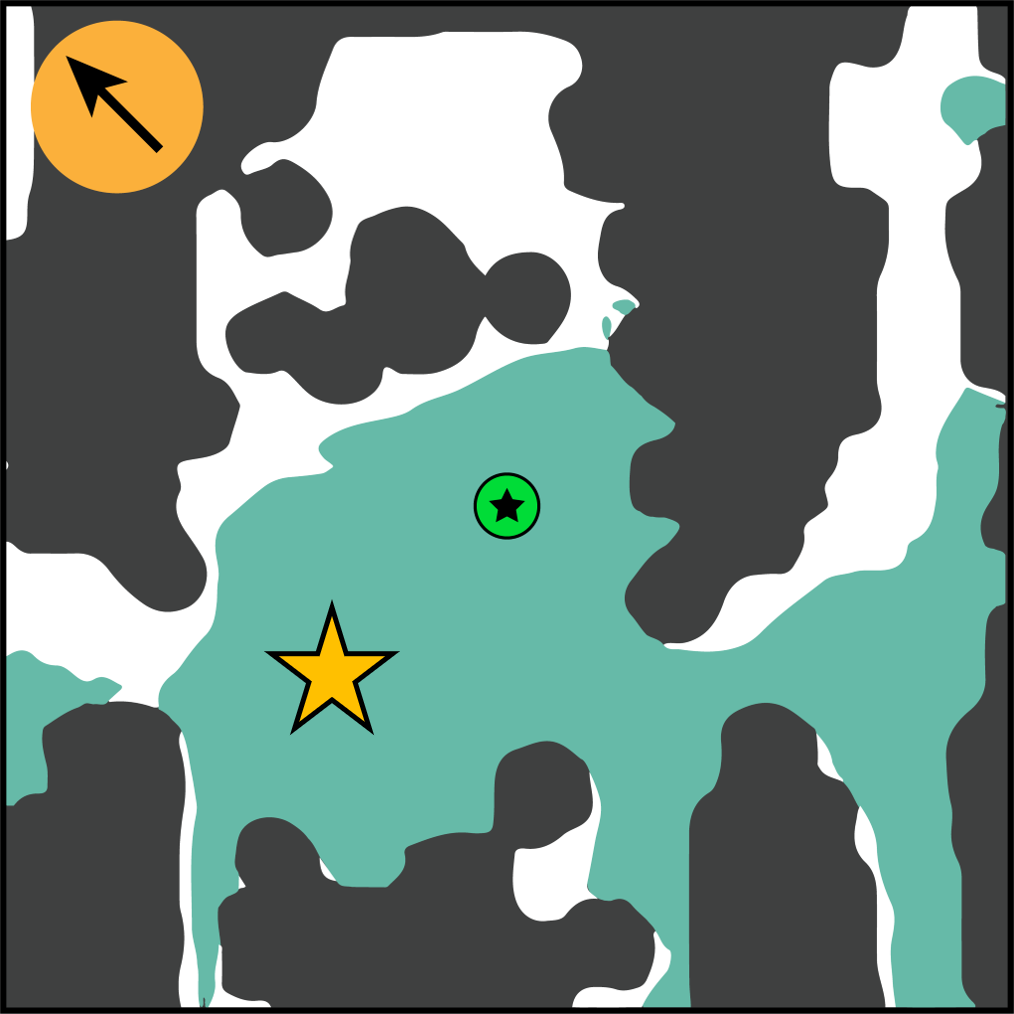

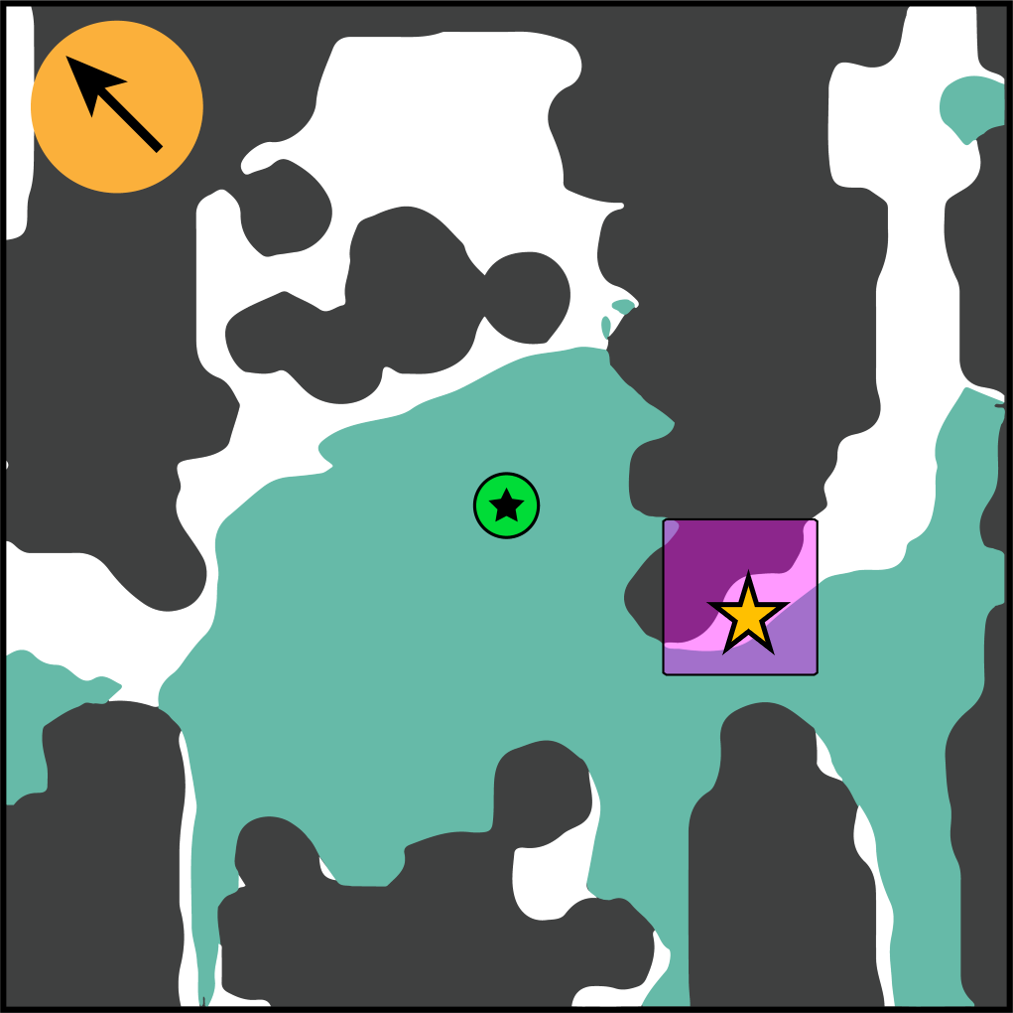

Our second case study analyzes a state-of-the-art visual navigation controller for a wheeled robot navigating an unknown indoor environment. To detect vision failures of this system, we compute its Backward Reach-Avoid Tube (BRAT), the set of states from which the robot reaches its goal without colliding with any obstacles (see the leftmost image in the animation below; the teal region shows the states included in the BRAT). A state simulated from the BRAT shows the robot successfully reaching the goal (marked with the green circle).

(Left) Ground truth occupancy map over an area of an office space. The obstacles are shown in dark grey, the free space is shown in white, and the goal area is shown in green. The BRAT slice is shown in teal for θ = 135°. We start the robot from one of the states in the BRAT (marked with the yellow star). (Center) Notice the top-view trajectory of the robot as it successfully makes its way to the goal while predicting waypoints (red marker in the center animation). (Right) The stream of monocular RGB images that the robot sees at every state.
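
The BRAT idea can be sketched on a toy one-dimensional problem as well. Again, this is an illustrative assumption-laden example rather than the code behind the results: the goal set, obstacle, `controller` stub, and constants are hypothetical, and the sign convention (value at or below zero means "reaches the goal while avoiding the obstacle") is one common choice. Free-space states that fall outside the computed BRAT are the candidate failure states.

```python
from bisect import bisect_right

DT = 0.1           # time step in seconds (assumed)
GOAL_RADIUS = 0.2  # goal set is |x| <= GOAL_RADIUS (assumed)
OBSTACLE_X = 1.8   # obstacle occupies x >= OBSTACLE_X (assumed)


def controller(x):
    """Hypothetical controller: heads to the goal unless x > 1.0,
    where we pretend perception fails and it drives at the obstacle."""
    return 0.5 if x > 1.0 else -x


def interp(x, grid, values):
    """Piecewise-linear interpolation, clamped to the end values of the grid."""
    if x <= grid[0]:
        return values[0]
    if x >= grid[-1]:
        return values[-1]
    i = bisect_right(grid, x) - 1
    w = (x - grid[i]) / (grid[i + 1] - grid[i])
    return (1.0 - w) * values[i] + w * values[i + 1]


def compute_brat(grid, steps=100):
    """Return a boolean mask of states that reach the goal collision-free."""
    reach = [abs(x) - GOAL_RADIUS for x in grid]  # <= 0 inside the goal
    avoid = [x - OBSTACLE_X for x in grid]        # positive inside the obstacle
    successor = [x + DT * controller(x) for x in grid]
    value = [max(r, a) for r, a in zip(reach, avoid)]
    for _ in range(steps):
        # V_{k+1}(x) = max(avoid(x), min(reach(x), V_k(successor of x)))
        value = [max(a, min(r, interp(nx, grid, value)))
                 for r, a, nx in zip(reach, avoid, successor)]
    return [v <= 0.0 for v in value]


grid = [-2.0 + 0.01 * i for i in range(401)]
brat = compute_brat(grid)
# Free-space states outside the BRAT are where the controller fails.
failures = [x for x, ok in zip(grid, brat) if not ok and x < OBSTACLE_X]
print(f"{len(failures)} free-space grid states lie outside the BRAT")
```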

The BRAT presents us with a visually intuitive way to determine the states from which the RGB controller fails to take the robot to its goal. We analyze three different failure modes of the visual controller in the paper. One such interesting failure case was the inability of the controller to discern the traversability of narrow passages. As seen in the animation below, the controller is unable to discern that the gap between the chair and the table is insufficient for the robot to pass through. Such failures also indicate the shortcomings of the choice of sensor for the system. For example, we hypothesize that adding depth estimation to the inputs could alleviate these failure modes, as it would provide the robot with better traversability information. Targeted training of the CNN near narrow passages might also improve the robot's performance in such scenarios.

(Left) The magenta square shows the states that are not covered by the BRAT. (Center) Simulating the trajectory from one such state shows that the controller is predicting waypoints through an area that the robot cannot traverse. (Right) The images seen by the robot show the controller leading it towards the space between the chair and the table, even though that gap does not offer enough clearance, eventually leading to a collision.

Acknowledgements: This webpage template was borrowed from some colorful folks.